Project carried out as part of the FactoryLab industrial consortium with CEA-List, Safran, NAVAL GROUP, Stellantis, SLB and LS GROUP.

Background

The drive to fully automate strenuous or repetitive manual tasks runs into technical difficulties and implementation costs, which limit automation to large manufacturing runs and repeatable processes. Other contexts, such as small runs, custom manufacturing, repair and maintenance, are too variable: they require the ability to analyse the context and adapt to it, which conventional robot programming solutions such as OLP (Offline Programming) cannot provide. This raises the following questions:

- How can operators’ expertise and decision-making ability be preserved, while providing them with assistance in demanding manual tasks?

- How can the robotics system become a tool in the hands of operators, which they can quickly adapt to the particularities of the situation?

Against that backdrop, the goal of the INTUIPROG project is to provide an intuitive and interactive programming solution that can be used across robot makes and models and that combines assistance and automation functions. This solution allows operators and non-robotics specialists to define and start small robotic tasks online. It takes the form of a software suite associated with motion capture hardware.

Challenges & innovation

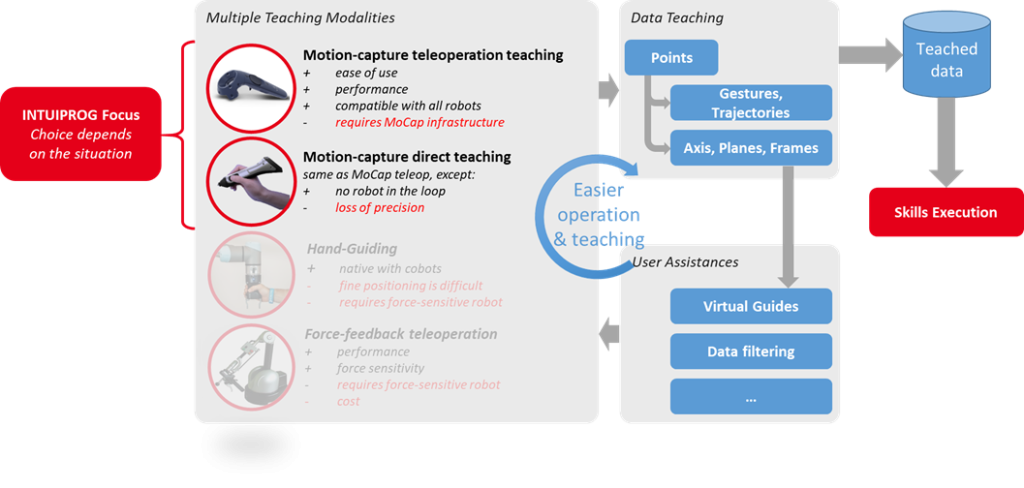

At the core of INTUIPROG is a multimodal teaching-by-demonstration solution for the automatic execution of robotic competencies, including:

- A flexible multimodal teaching module: remote-operated or offline motion capture, remote operation with force feedback, and hand guiding. Assistance functions (virtual guides, post-processing of taught data) make demonstration easier.

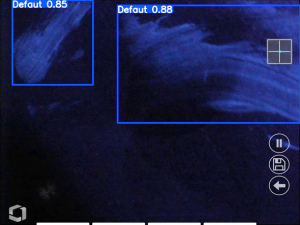

- A framework of competencies, that is to say robotic functions dedicated to the operator’s trade, for automating small tasks (e.g. cutting tubes, drilling, screwing, sanding surfaces).

- A dedicated graphical interface.

This solution particularly stands out because of the following key points:

- It is intuitive for the operator

- It is compatible with all types of robot (industrial, collaborative, cobots)

- It allows contactless teaching by demonstration (from outside a robotic cell, for instance)

- It allows fast and simple adaptation to process changes

The resulting workflow is illustrated in the following chart. Depending on the context, the operator selects the teaching method (remote operation or direct teaching) that is best suited to their needs. Using motion capture, they easily teach the robot the application points for the competency they want to use. To make teaching easier, markers or work planes can be taught and used to define virtual assistance guides. These guides limit the degrees of freedom of the robot and make remote operation even easier, allowing the operator to focus fully on their task.
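As an illustration of how a guide can limit the robot's degrees of freedom, the sketch below builds a work plane from three taught markers and removes the out-of-plane component of a commanded displacement. This is only a minimal sketch under assumed conventions: the function names (`plane_normal`, `constrain_to_plane`) and the choice of an in-plane constraint are hypothetical and are not taken from the INTUIPROG software.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    norm = math.sqrt(dot(v, v))
    if norm < 1e-12:
        raise ValueError("zero-length vector: the taught markers are collinear")
    return tuple(x / norm for x in v)

def plane_normal(p0, p1, p2):
    """Unit normal of the work plane defined by three taught markers (hypothetical helper)."""
    return normalize(cross(sub(p1, p0), sub(p2, p0)))

def constrain_to_plane(delta, normal):
    """Remove the component of a commanded displacement along the plane normal."""
    d = dot(delta, normal)
    return tuple(x - d * n for x, n in zip(delta, normal))

# Three taught markers lying on the table (illustrative values, in metres).
normal = plane_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))
# Operator command with an unwanted vertical component.
guided = constrain_to_plane((0.02, -0.01, 0.05), normal)
print(guided)
```

With such a guide active, the operator's hand motion only steers the robot within the plane, which removes one translational degree of freedom from the task.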

Results

- Multimodal teaching via motion capture

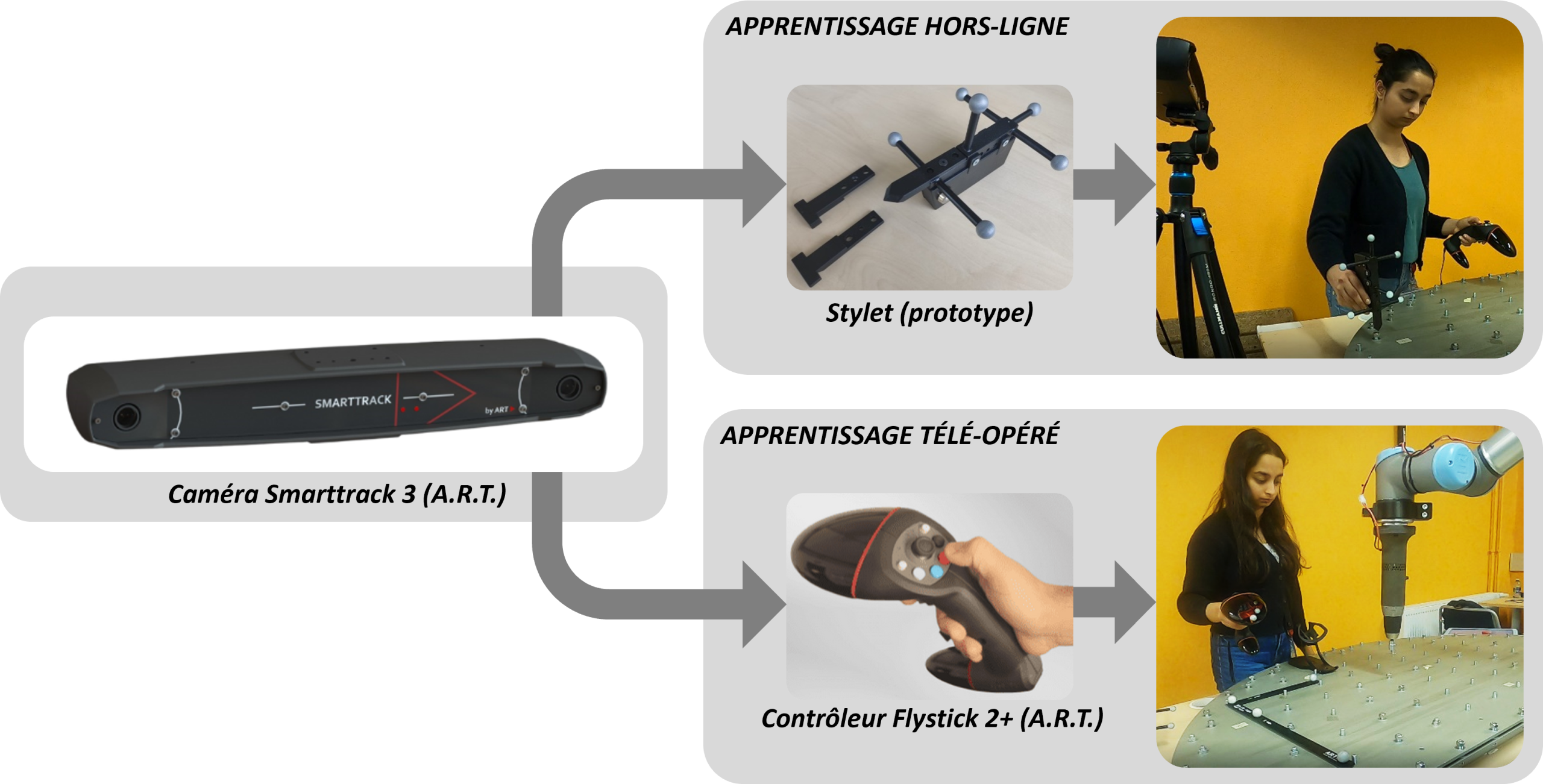

After early experimentation with other equipment (HTC Vive as part of the European MERGING project) we selected equipment made by ART, which stands out for its ease of deployment and tracking accuracy. The equipment used includes a pre-calibrated stereo camera, a Flystick2+ remote control for remote-operated teaching, and a prototype stylus for direct teaching. ART also supplies a standard passive stylus (measurement tool), which can be used for the same purpose.

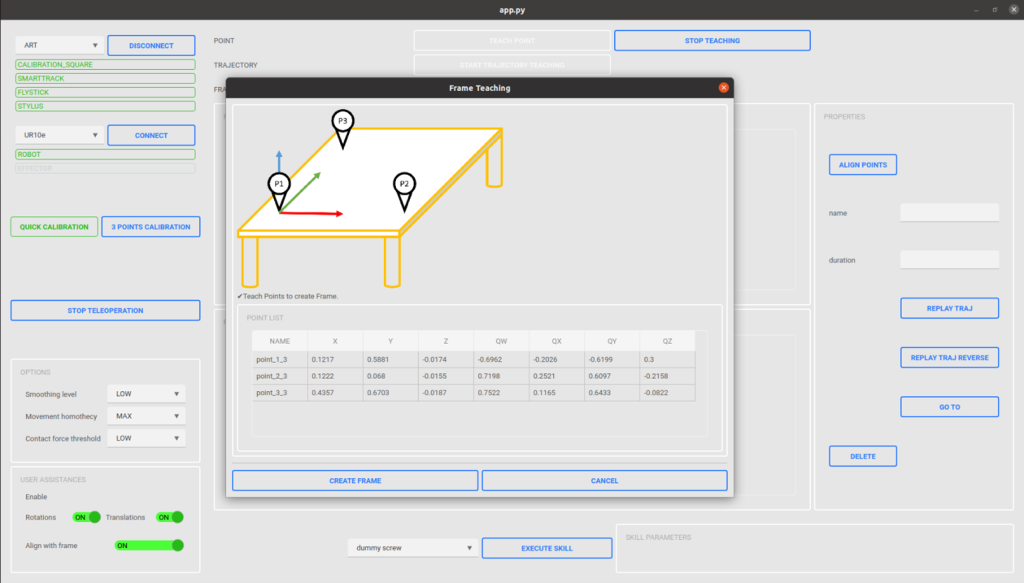

- User interface and assistance functions

Significant effort has gone into the user interface, because its usability has a direct impact on the whole workflow. The interface has been designed to be minimal and easy to use, while providing all the features required for using the system: control and calibration of equipment (robot and mocap), teaching of points, assistance functions and competency execution. The following chart illustrates the interface. The functions (here teaching of markers) are documented and illustrated to make them easier to master.

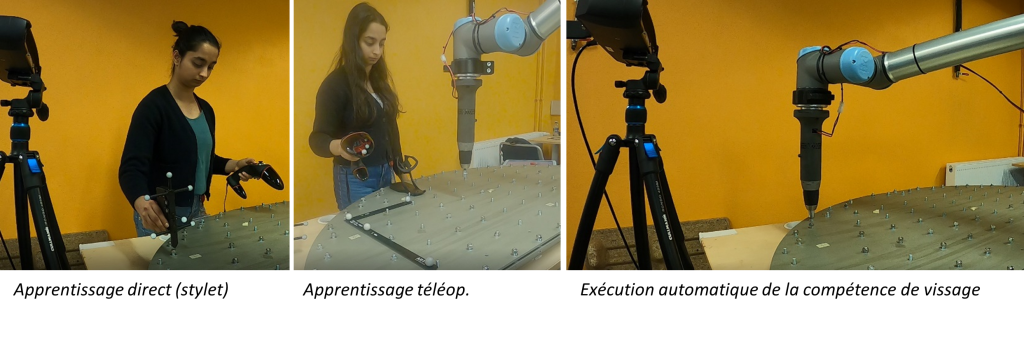

- Demonstration on use case

The solution was implemented and assessed on a use case for screwing supplied by one of the manufacturing partners in the project, and a small-scale model was made. The two teaching methods were implemented and compared.

For direct teaching with a stylus, the operator teaches all the screwing points in the desired order. They use an assistance function to reorient all the learned points perpendicular to the working plane. The screwing competency can then be executed for all the learned points.
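The reorientation step can be sketched as follows, assuming each taught point is stored as a position plus a tool approach axis and that the working plane normal is already known. The data layout and the function name `reorient_to_plane` are assumptions made for illustration, not the project's actual data model.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    if norm < 1e-12:
        raise ValueError("zero-length vector")
    return tuple(x / norm for x in v)

def reorient_to_plane(points, normal):
    """Replace each taught approach axis by the plane normal (hypothetical helper).

    points: list of (position, approach_axis) tuples.
    The sign of the normal is chosen to match the taught axis, so the tool
    keeps approaching from the side the operator demonstrated.
    """
    n = normalize(normal)
    result = []
    for position, axis in points:
        sign = 1.0 if dot(axis, n) >= 0 else -1.0
        result.append((position, tuple(sign * c for c in n)))
    return result

# Two points taught with a slightly tilted stylus (illustrative values).
taught = [
    ((0.10, 0.20, 0.0), (0.10, 0.00, -0.99)),
    ((0.15, 0.20, 0.0), (-0.05, 0.08, -0.99)),
]
aligned = reorient_to_plane(taught, (0.0, 0.0, 1.0))
for position, axis in aligned:
    print(position, axis)
```

Positions are left untouched; only the orientation taught by hand, which is typically the least precise part of a stylus demonstration, is replaced.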

For remote-operated teaching, the operator starts by teaching the working plane, in order to activate a virtual guide that locks the robot onto the perpendicular to the plane. All the points can then be taught, and the screwing competency can be executed for the learned points.
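One possible reading of this guide is that the tool axis stays aligned with the plane normal while the operator remains free to translate the robot. Under that assumption, the sketch below filters a commanded angular velocity so that only rotation about the normal is kept. The function name `lock_tool_axis` and the velocity-level formulation are hypothetical and are not taken from the INTUIPROG implementation.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    if norm < 1e-12:
        raise ValueError("zero-length vector")
    return tuple(x / norm for x in v)

def lock_tool_axis(linear_velocity, angular_velocity, normal):
    """Filter a teleoperation command so the tool axis cannot tilt (hypothetical helper).

    Translation passes through unchanged. Rotation is reduced to its
    component about the plane normal, which blocks two rotational
    degrees of freedom.
    """
    n = normalize(normal)
    spin = dot(angular_velocity, n)
    return linear_velocity, tuple(spin * c for c in n)

# Operator command: a translation plus an unintended tilt (illustrative values).
v_cmd = (0.03, -0.02, 0.00)
w_cmd = (0.20, -0.10, 0.30)
v_out, w_out = lock_tool_axis(v_cmd, w_cmd, (0.0, 0.0, 1.0))
print(v_out, w_out)
```

The operator can then concentrate on positioning the tool over each screwing point without having to manage its orientation.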

Absolute precision is best with remote operation, but both methods result in sufficient precision overall for carrying out the task. In a teaching task with 70 nuts to screw in, the success rate was 98.5% with direct teaching and 100% with remote operation.

| | Hand guiding | Direct mocap | Remote op mocap |
| --- | --- | --- | --- |
| Accuracy | ± 5 mm | ± 3 mm | ± 2 mm |
| Speed | 6 s / point | 2 s / point | 6 s / point |
| Physical difficulty | Difficult | Very easy | Easy |
| Mental load | High | Minimum | Low/medium |

In general, tests have amply demonstrated the efficiency of mocap for teaching (two- to three-fold improvement in time and precision, compared to cobotic teaching using hand guiding). Teaching is also easier, both in terms of physical difficulty (with cobotics, teaching distant points soon becomes tiring) and in terms of mental load (achieving satisfactory precision with cobotics calls for a lot of concentration, due to robot friction).

Prospects

The developments initiated as part of the INTUIPROG project are continuing under the European JARVIS project, which focuses on two aspects. The first is the functional development of the remote operation module using motion capture, by improving remote operation performance, coupling it with the CORTEX controller and adding a control interface using virtual/augmented reality. The second is the addition of real-time coupling with the SCORE digital twin, which will improve 3D viewing of the taught data and give access to advanced virtual guiding, collision avoidance and path planning functions. The digital twin will also help validate competencies in simulation before they are executed on actual systems.

Author: Baptiste GRADOUSSOFF, INTUIPROG Project Manager, CEA-List.