Project conducted by the FactoryLab industrial consortium with CETIM, Naval Group and SLB.

Background

Pollutants inside industrial piping can disrupt production flows or even lead to failures, for example when they interfere with an assembly process such as gluing or welding. It is therefore important to detect and treat these problems as early as possible.

A previous FactoryLab project (GREASE) had already inspected the inside of piping by video endoscopy with manual detection, and it revealed the need to automate detection in the video stream. Given the complexity of video endoscopy inspection data, CETIM opted for a detection method based on an artificial intelligence (AI) model.

The main purpose of this study was to develop a solution for automatically detecting pollution in industrial piping. The piping is inspected by video endoscopy, and an AI model performs the detection on the resulting images.

Use cases and project stages

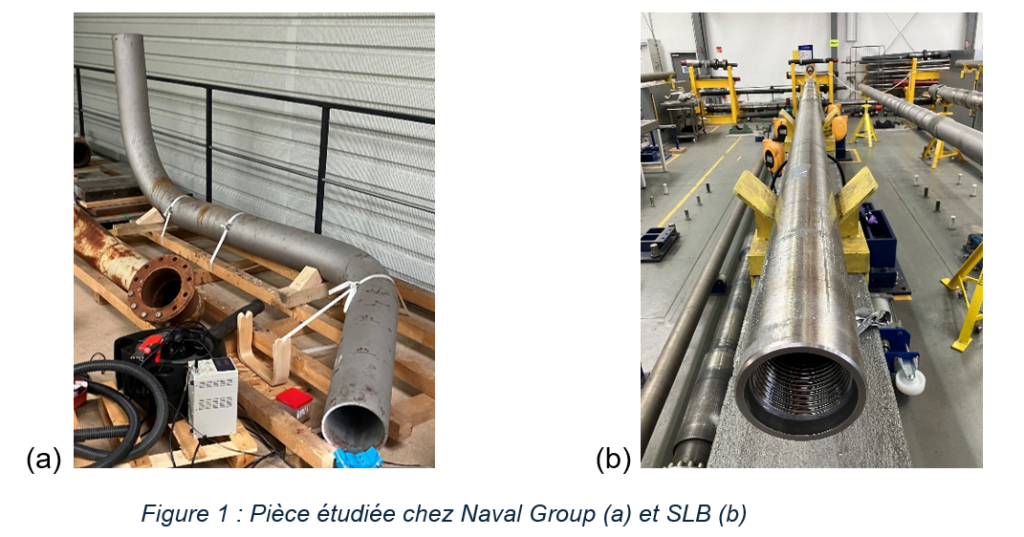

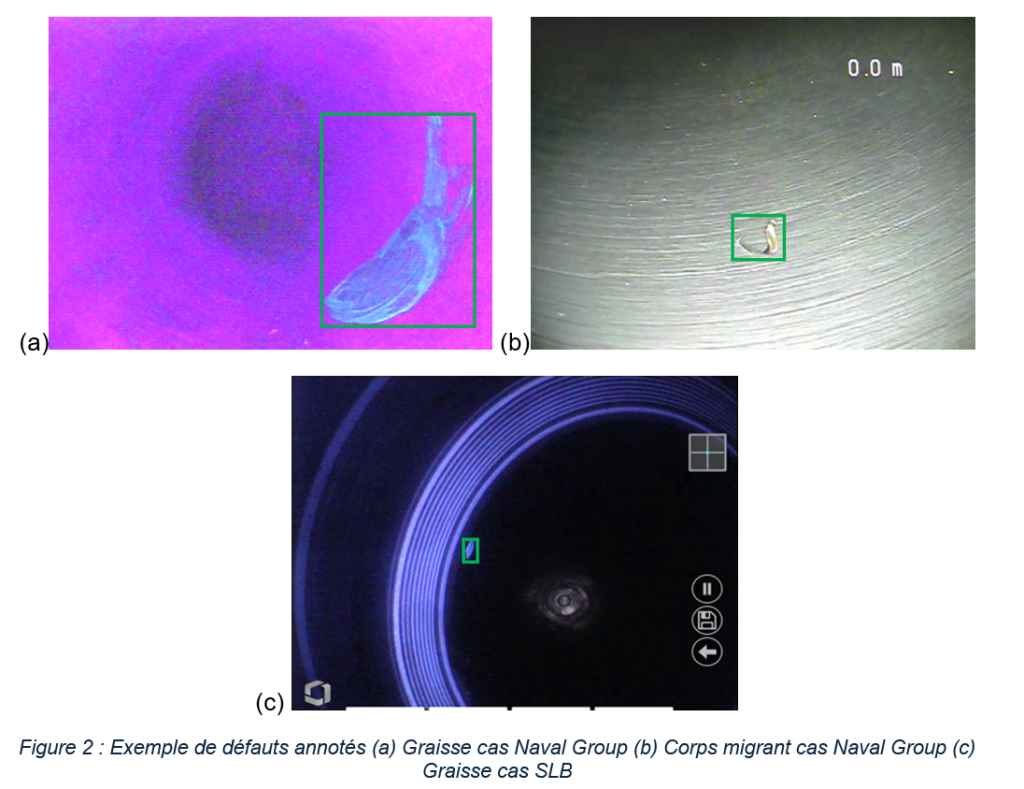

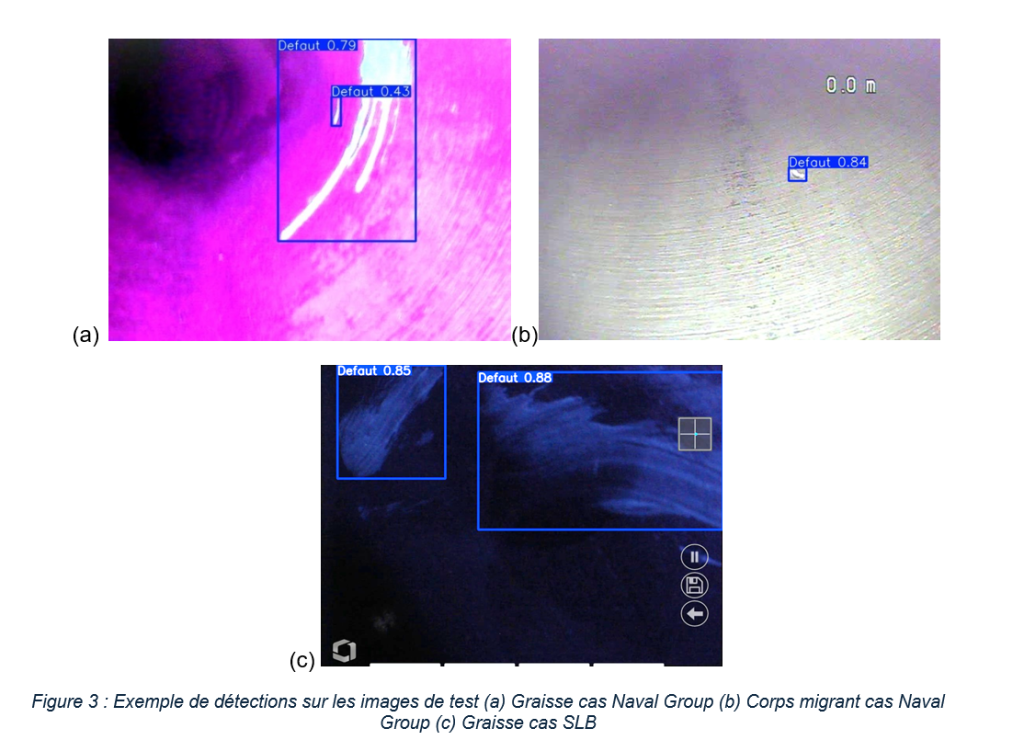

The study covered two use cases: bent steel piping at Naval Group, and collars used by SLB as part of underground boring equipment. In both cases, the aim was to detect grease in the pipe under UV light. For Naval Group, the project also sought to detect loose parts (fishing wire, chips and tools) under white light.

The first step in the project was to build image banks for the defined use cases, in order to train the models. All the images were then annotated manually (i.e. the exact location of each fault was marked) to give the algorithm the indications it needs for training.

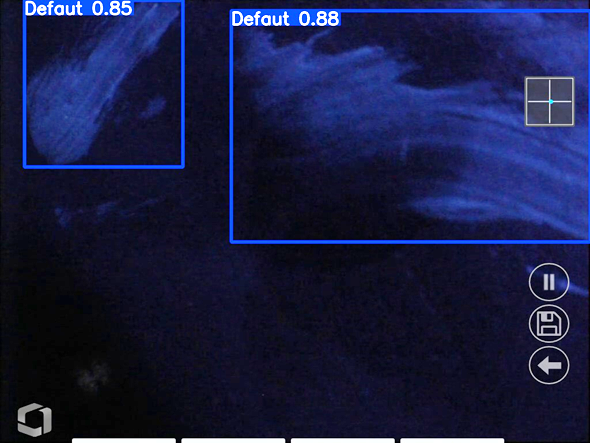
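As an illustration of what such an annotated image bank might look like in code, here is a minimal Python sketch. The record layout (`AnnotatedImage`, `Box`), the rectangle-based annotation format, the file names and the 20% test fraction are all assumptions made for this example; the article does not describe the annotation format or tooling actually used in the project.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Box:
    """Rectangle around one fault, in pixel coordinates (assumed annotation format)."""
    x: int
    y: int
    width: int
    height: int


@dataclass
class AnnotatedImage:
    """One image from the image bank together with its manual annotations."""
    filename: str   # hypothetical file name
    lighting: str   # "uv" or "white", the two lighting modes mentioned in the study
    boxes: list[Box] = field(default_factory=list)  # empty list means defect-free

    @property
    def has_defect(self) -> bool:
        return len(self.boxes) > 0


def split_holdout(images, test_fraction=0.2, seed=0):
    """Shuffle reproducibly and set aside a test subset that is never used for training."""
    shuffled = list(images)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (training set, test set)
```

Keeping the split deterministic through a fixed seed makes it easier to guarantee that the images used for evaluation stay separate from those used for training.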

Finally, the algorithms were trained and integrated with the video endoscopy devices. Two options were tried: running detection directly on the device, which is not optimal in terms of detection time because the endoscope used is not powerful enough, or diverting the video stream to a computer and running detection there. The second option was preferred for tests in actual conditions.
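The idea of running detection on a diverted video stream can be sketched as a simple frame-by-frame loop. This is only an outline under stated assumptions: `detector` stands for any callable wrapping the trained model, `Frame` is a placeholder type, and `keeps_up` is a hypothetical helper for checking whether the hardware is fast enough; none of these names come from the project.

```python
import time
from typing import Callable, Iterable, Iterator, List, Tuple

Frame = bytes                          # placeholder for one decoded video frame
Detection = Tuple[int, int, int, int]  # assumed (x, y, width, height) of one detected defect


def detect_on_stream(
    frames: Iterable[Frame],
    detector: Callable[[Frame], List[Detection]],
) -> Iterator[Tuple[int, List[Detection], float]]:
    """Run the detector on each frame and report how long each call took."""
    for index, frame in enumerate(frames):
        start = time.perf_counter()
        detections = detector(frame)
        elapsed = time.perf_counter() - start
        yield index, detections, elapsed


def keeps_up(latencies: List[float], fps: float) -> bool:
    """True if the mean per-frame latency fits within one frame period."""
    if not latencies:
        return True
    return sum(latencies) / len(latencies) <= 1.0 / fps
```

Measuring the per-frame latency in this way gives a direct criterion for comparing targets: a device is suitable for real-time use only if its mean detection time stays below the frame period of the video stream.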

Results

The algorithms trained on the different use cases were then tested on a part of the database that had not been used for training and, for Naval Group, in actual conditions. The results were promising: correct detection rates of 80% to 93% depending on the use case, with fewer than 10% false positives on defect-free images.
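To make the two figures concrete, the sketch below shows one way such rates could be computed on a held-out test set. It assumes an image-level evaluation, where each image is simply labelled as containing a defect or not; the article does not state exactly how the project counted detections, so the function `detection_metrics` and its definitions are illustrative only.

```python
def detection_metrics(ground_truth, predictions):
    """Image-level rates (assumed definitions).

    ground_truth[i] is True if test image i really contains a defect.
    predictions[i] is True if the model flagged a defect on image i.
    """
    if len(ground_truth) != len(predictions):
        raise ValueError("ground_truth and predictions must have the same length")

    defect_total = sum(1 for truth in ground_truth if truth)
    clean_total = len(ground_truth) - defect_total

    # Defective images that the model correctly flagged.
    detected = sum(1 for t, p in zip(ground_truth, predictions) if t and p)
    # Defect-free images that the model wrongly flagged.
    false_alarms = sum(1 for t, p in zip(ground_truth, predictions) if not t and p)

    return {
        "detection_rate": detected / defect_total if defect_total else None,
        "false_positive_rate": false_alarms / clean_total if clean_total else None,
    }
```

With these definitions, a model that flags 4 of 5 defective images and 1 of 10 defect-free images would have a detection rate of 80% and a false positive rate of 10%.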

Conclusion & outlook

The study demonstrated the relevance of using artificial intelligence to detect pollution: it gave good results on the different use cases studied, and could assist inspectors during their inspections.

The study also identified several areas for improvement. Firstly, it would be useful to integrate the algorithm into a more recent and more powerful video endoscope than the one used in the project, so as to test real-time detection directly on the device. If that still proves impossible, it would be preferable, for reasons of industrial integration, to run detection on a device of the NVIDIA Jetson type rather than on a computer. Classification of the detected pollution could also be considered: at present, all pollution is placed in a single “defect” class, and greater precision could be obtained with one class per type of defect (e.g. for Naval Group: fishing wire, chip or tool).

Author: Antoine VALENTIN, PRECINET Project Manager, CETIM.